Brief. Bioinformatics | A novel convolutional layer with adaptive kernels from Gao Lab, Peking University

On July 6th, 2021, the Gao Lab from the Biomedical Pioneering Innovation Center (BIOPIC) and the Beijing Advanced Innovation Center for Genomics (ICG) published a research article titled “Identifying complex motifs in massive omics data with a variable-convolutional layer in deep neural network” in Briefings in Bioinformatics, proposing a novel convolutional layer for deep neural networks.

Deep learning, a subfield of machine learning, usually refers to a broad family of neural network-based methods, such as Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs), that aim to automatically “learn” proper representations for feature detection or classification from large-scale, high-dimensional data. Benefitting from rapid advances in computer processing capability, the wide use of deep neural networks in image recognition/computer vision and natural language processing has led to impressive performance comparable to, and in some cases surpassing, that of human experts. In particular, CNNs, being capable of capturing local features, have been widely applied in various life science fields, such as the analysis of omics sequences and bioimaging data.

A CNN recognizes recurrent fragments in input sequences with the kernels of its convolutional layers and assembles them to discover sequence motifs. However, the canonical convolutional layer is restricted to kernels of predefined, fixed length, and is thus not well suited to discovering complex signal patterns in massive omics data. A workaround is to combine multiple convolutional layers with kernels of different sizes, yet this makes model training harder due to explosive growth of the parameter space.
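To make the fixed-length limitation concrete, here is a minimal NumPy sketch of how a single canonical kernel scans a one-hot encoded DNA sequence. The helper names `one_hot` and `scan`, the toy sequence, and the "TATA" kernel are illustrative assumptions, not taken from the paper or its code.

```python
import numpy as np

ALPHABET = "ACGT"

def one_hot(seq):
    """Encode a DNA string as a (length, 4) matrix, one row per base."""
    idx = [ALPHABET.index(base) for base in seq]
    return np.eye(4)[idx]

def scan(seq, kernel):
    """Slide a fixed-length kernel along the sequence; one score per window."""
    x = one_hot(seq)
    k = kernel.shape[0]  # the kernel length is fixed before training
    return np.array([np.sum(x[i:i + k] * kernel)
                     for i in range(len(seq) - k + 1)])

# Hypothetical kernel that rewards the 4-mer "TATA" (illustration only).
kernel = one_hot("TATA")
scores = scan("GGTATAGG", kernel)
best = int(np.argmax(scores))  # start of the best-matching window
print(scores, best)
```

A kernel of length 4 can only score 4-base windows, so a motif that is longer or shorter than the chosen length is matched only partially or diluted by flanking positions.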

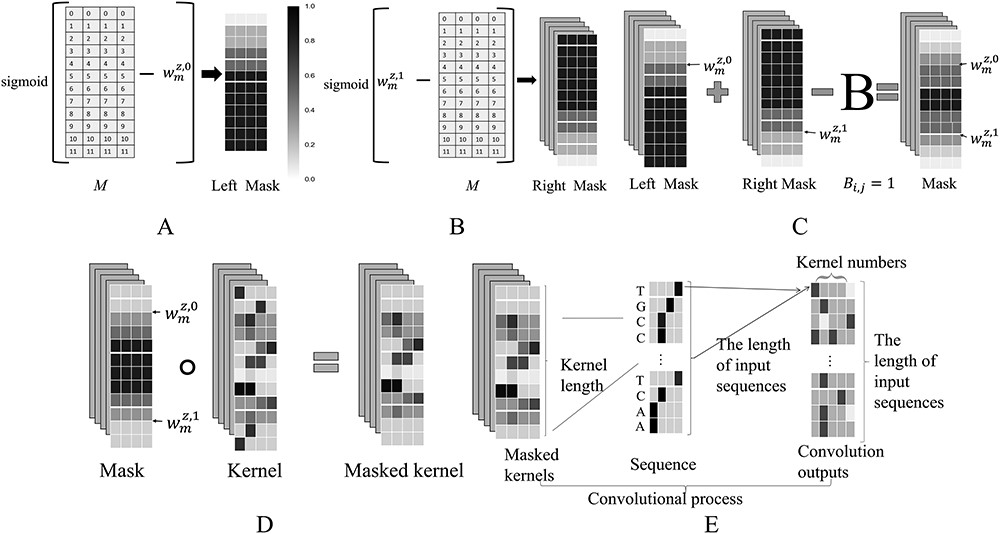

In this work, the researchers introduced a novel convolution-based layer, vConv, that adapts its kernel lengths during training. This is achieved by multiplying the kernel with two trainable S-curves of opposite orientation, which dynamically masks the side elements of the kernel and thus learns the effective kernel length on the fly (Fig. 1). The researchers demonstrated that vConv-based neural networks can accurately identify and discover motifs from gigabytes of omics data, and that they markedly outperform canonical tools and convolution-based neural networks.

Figure 1. The design of vConv. To build the mask matrix, vConv generates two sigmoid functions of opposite orientation (A and B) and overlays them (C). vConv then applies this mask matrix to the kernel using the Hadamard product (D) and treats the masked kernel as an ordinary kernel to convolve the input sequence (E).
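The masking idea in Figure 1 can be sketched in a few lines of NumPy. This is an illustration under stated assumptions, not the published implementation: the two sigmoids are combined here by an elementwise product (one possible reading of "overlaying"), and the names `left`, `right`, and `steepness` are hypothetical. In a real layer the two boundaries would be trainable parameters updated by gradient descent; here they are fixed numbers.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def length_mask(max_len, left, right, steepness=4.0):
    """Soft mask over kernel positions built from two opposite S-curves."""
    pos = np.arange(max_len)
    rising = sigmoid(steepness * (pos - left))    # ~0 before `left`, ~1 after
    falling = sigmoid(steepness * (right - pos))  # ~1 before `right`, ~0 after
    return rising * falling  # assumption: curves combined by product

def masked_kernel(kernel, left, right):
    """Apply the mask to a (length, 4) kernel via the Hadamard product."""
    mask = length_mask(kernel.shape[0], left, right)
    return kernel * mask[:, None]  # broadcast the mask over the 4 channels

rng = np.random.default_rng(0)
kernel = rng.normal(size=(12, 4))            # maximum kernel length of 12
mask = length_mask(12, left=2.5, right=8.5)  # hypothetical boundaries
effective = masked_kernel(kernel, 2.5, 8.5)
print(np.round(mask, 2))
```

With these boundaries the mask is close to 1 for positions 3 through 8 and close to 0 elsewhere, so the masked kernel behaves like a kernel of length about 6 even though 12 positions are allocated. Because the mask is smooth, shifting `left` or `right` changes the output continuously, which is what would allow the effective length to be learned.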

The vConv layer can be introduced into any multi-layer neural network as a “drop-in” replacement for the canonical convolutional layer, for applications in multiple fields such as data mining and image analysis. The code and tutorial for vConv are available on GitHub (https://github.com/gao-lab/vConv). We believe that vConv, along with the ePooling layer published last year in Bioinformatics (doi: 10.1093/bioinformatics/btz768, https://github.com/gao-lab/ePooling), could offer scientists and engineers working in omics a unique solution for boosting existing convolution-based networks.

Dr. Ge Gao and his former PhD student Dr. Yang Ding (now a postdoctoral fellow at the Beijing Institute of Radiation Medicine) led the research, with Mr. Jing-Yi Li, a PhD candidate, and Mr. Shen Jin, a former intern (now a Master's student in Computational Biology at Carnegie Mellon University), as co-first authors of the paper. Mr. Xin-Ming Tu, an undergraduate student, contributed substantially to code testing and co-authored the manuscript. This research was supported by the Ministry of Science and Technology (MOST), the Beijing Advanced Innovation Center for Genomics (ICG), and the State Key Laboratory of Protein and Plant Gene Research. Part of the data analysis was performed on the High-Performance Computing Platform of Peking University.

Link: https://academic.oup.com/bib/advance-article/doi/10.1093/bib/bbab233/6312656